Background File Processing with Azure Functions

Processing uploaded files is a pretty common web app feature, especially in business scenarios. You frequently get a request from your users that they want to be able to do some work on some data in Excel, generate a CSV, and upload it into the system through your web application.

If the files are small enough, or can be processed quickly, it's generally fine to handle the import within the request. But sometimes you have to do so much processing, or so much database IO, that it's impractical to run the import as part of the upload process: you need to push that work out to a background job of some sort.

Introduction

Introducing a background job turns the user’s workflow from a synchronous process into an asynchronous one. This has some benefits: the user doesn’t have to wait, or risk timing out your web server. But it also brings along some challenges: how do you notify them when the import is complete? How do you tell them something went wrong and how to fix it?

At a high level, the pattern for this sort of process can be understood as three main components: the web app, the job processor, and the notification system.

The user uploads the file into the Web App, which uses some mechanism to asynchronously pass it to the Processor, which consumes the file, and sends a notification to the user through the Notifier.

While these systems are all basically the same at this architectural level, there are dozens of tools and techniques to choose from for each component. You can use dedicated background job processors like Hangfire, or queue and service bus tools like RabbitMQ, NServiceBus, or Azure Service Bus. For the notification system you could use WebSockets, emails, or some kind of polling mechanism to let the user know the job is complete.

One Successful Model

The rest of this article is going to describe a solution we opted to use for a recent project.

Users of this system are able to upload a file generated by a desktop application that specifies all the products they will need manufactured in order to complete their project. The web app takes in the file, contacts an internal SOAP API to get pricing for each item, and then adds the quote to their cart.

This was working fine when orders were small, but as the product became more and more successful, our end users were sending in larger and larger files for pricing. Eventually the web application would time out. Users also got frustrated waiting, and would upload the file a second time hoping it would go through. This just compounded the problem.

The solution we decided on was to implement an asynchronous import process, and since we were already in Azure, we wanted to build the solution on top of services we could easily provision within that environment.

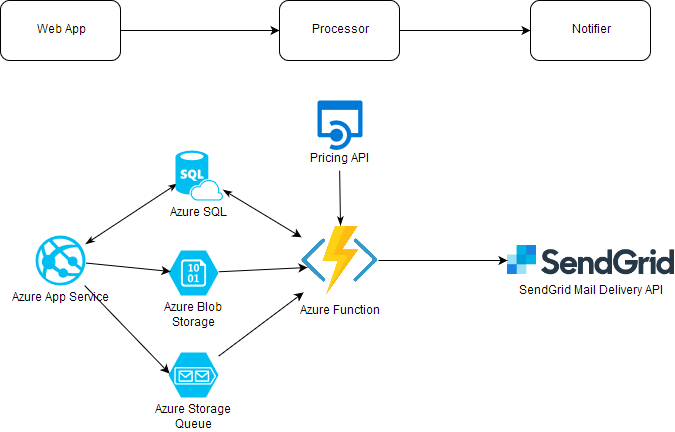

We decided on using an Azure Function for the Processor and SendGrid for the Notifier.

To connect the web app to the Azure function we went with an Azure storage account, specifically Blobs and Queues.

The architecture diagram for this system looks something like this:

The basic flow is:

- The user uploads a file into the web application. Some rudimentary validation is performed: is this even a valid file?

- Files that pass validation are stored in a Blob Container in the storage account, using a GUID for the name.

- A message is pushed into a Queue on the storage account, containing some metadata about the import and, most importantly, the GUID that identifies the uploaded file.

- Azure triggers the Function when a new message is added to the Queue. The function downloads the file from Blob storage, and uses data from the shared Database and the Pricing API to calculate a quote.

- Finally, the function stores the quote in the database and generates a PDF with the details, which is then emailed to the customer via SendGrid.

The Azure Function integration with Queues is really good. If processing a file crashes, for example because the pricing API went down, Azure notices that the function invocation failed and re-queues the message so it can be processed again. After 5 failed attempts (the default), the message is moved to a separate poison queue, the Storage Queue counterpart of a dead-letter queue, where we can analyze it.
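The retry limit is a host-level setting. As a rough sketch, assuming the version 1 Functions runtime (which the `TraceWriter`-based samples in this article suggest), a `host.json` like the following would make that limit explicit. The 30-second `visibilityTimeout` is only an illustrative value for the delay between retries, not something taken from our project:

```json
{
  "queues": {
    "maxDequeueCount": 5,
    "visibilityTimeout": "00:00:30"
  }
}
```

With the queue name used later in this article, the poison queue would be named `file-uploaded-queue-poison`. On newer runtime versions the same `queues` section sits under an `extensions` key instead.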

Azure Functions also have really tight integration with Application Insights. We can get real-time logs as files are processed, and set up alerting for increased failure rates.

Relevant Code Samples

Uploading the file and sending the message

    // assumes the WindowsAzure.Storage and Newtonsoft.Json NuGet packages
    using System;
    using System.Configuration;
    using System.IO;
    using System.Web;
    using Microsoft.WindowsAzure.Storage;
    using Microsoft.WindowsAzure.Storage.Queue;
    using Newtonsoft.Json;

    // data can come
    private void UploadAndSendMessage(HttpPostedFileBase uploadedFile, string email)
    {
        // a unique name for the uploaded file
        var identifier = Guid.NewGuid().ToString("D");

        // get the storage account connection string from AppSettings in the web.config
        var account = CloudStorageAccount.Parse(ConfigurationManager.AppSettings["StorageAccount"]);

        // save the file by copying the input stream to blob storage
        var blobClient = account.CreateCloudBlobClient();
        var container = blobClient.GetContainerReference("uploaded-files");
        var blob = container.GetBlockBlobReference(identifier + ".txt");
        blob.UploadFromStream(uploadedFile.InputStream);

        // send a message into the queue
        var client = account.CreateCloudQueueClient();
        var queue = client.GetQueueReference("file-uploaded-queue");
        var message = new
        {
            // who to notify
            Email = email,
            // a nice name for the generated PDF, so it matches the name of the user's file instead of our GUID
            FriendlyName = Path.GetFileNameWithoutExtension(uploadedFile.FileName),
            // the GUID
            FileIdentifier = identifier,
        };
        queue.AddMessage(new CloudQueueMessage(JsonConvert.SerializeObject(message)));
    }

I could have used a dedicated class for the message body, but just chose to serialize an anonymous object.

Azure Function Invocation

Make sure you have the Azure tools and the Azure Functions tools for Visual Studio installed; they will generate a lot of this code for you. Add an Azure Functions project to your solution, and add a C# function triggered from a queue. Set the Connection property to the name of the app setting you will use for the connection string, and the Path property to the name of the queue.

It will generate something like this:

    using Microsoft.Azure.WebJobs;
    using Microsoft.Azure.WebJobs.Host;

    public static class Function1
    {
        [FunctionName("Function1")]
        public static void Run([QueueTrigger("file-uploaded-queue", Connection = "StorageAccount")]string myQueueItem, TraceWriter log)
        {
            log.Info($"C# Queue trigger function processed: {myQueueItem}");
        }
    }

This is a fully working function. To test it, add your storage connection string to the "StorageAccount" key in local.settings.json and press F5. Then open the queue in the Azure portal and put in a test message. You should see it logged to the console. You can set breakpoints and debug like normal.
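For reference, a `local.settings.json` for this setup might look roughly like the sketch below. The `StorageAccount` key matches the `Connection` name used in the trigger attribute; the placeholder value and the use of the local storage emulator for `AzureWebJobsStorage` are assumptions, so substitute your own connection strings:

```json
{
  "IsEncrypted": false,
  "Values": {
    "AzureWebJobsStorage": "UseDevelopmentStorage=true",
    "StorageAccount": "<your storage account connection string>"
  }
}
```

This file is only used when running locally. In Azure, the same key would be defined as an application setting on the Function App.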

We’re going to make it a little better by having the function receive a strongly typed object instead of just a string:

    using Microsoft.Azure.WebJobs;
    using Microsoft.Azure.WebJobs.Host;

    public class FileUploadedMessage
    {
        public string Email { get; set; }
        public string FileIdentifier { get; set; }
        public string FriendlyName { get; set; }
    }

    public static class Function1
    {
        // notice "file-uploaded-queue" is the name of the queue we used in the previous
        // sample
        [FunctionName("Function1")]
        public static void Run([QueueTrigger("file-uploaded-queue", Connection = "StorageAccount")]FileUploadedMessage message, TraceWriter log)
        {
            log.Info($"C# Queue trigger function processed: {message.FileIdentifier}");
        }
    }

Azure will automatically deserialize a JSON message into an instance of the FileUploadedMessage class.
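To make the binding concrete, a queue message shaped like the following would populate all three properties. The values are made up for illustration; only the property names come from the class and the upload code:

```json
{
  "Email": "user@example.com",
  "FriendlyName": "kitchen-project",
  "FileIdentifier": "3f2504e0-4f89-41d3-9a0c-0305e82c3301"
}
```

A message like this can also be used as the test message when trying out the function by hand.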

Downloading the file as a string for processing

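    // assumes the WindowsAzure.Storage and Microsoft.Azure.ConfigurationManager NuGet packages
    using Microsoft.Azure;
    using Microsoft.WindowsAzure.Storage;

    private static string GetFileContents(FileUploadedMessage msg)
    {
        // read the same "StorageAccount" setting the queue trigger uses
        var storageAccount = CloudStorageAccount.Parse(
            CloudConfigurationManager.GetSetting("StorageAccount"));
        var blobClient = storageAccount.CreateCloudBlobClient();
        var container = blobClient.GetContainerReference("uploaded-files");

        // the blob name is the GUID from the queue message plus the extension used at upload
        var blob = container.GetBlockBlobReference(msg.FileIdentifier + ".txt");
        return blob.DownloadText();
    }

Sending an email with SendGrid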

We opted to use SendGrid templates so that we could modify the email content without redeploying the app.

Our template also included some tiny text at the bottom to mark the file identifier, so that if a user had an issue we would know what file was uploaded. We set up a placeholder as <%TransactionId%> inside the text.

    using System;
    using Microsoft.Azure;
    using SendGrid;
    using SendGrid.Helpers.Mail;

    // friendlyName is assumed to be the FriendlyName value from the queue message;
    // it is used to name the PDF attachment
    public void SendSuccessEmail(string email, byte[] pdfContent, Guid identifier, string friendlyName)
    {
        var client = new SendGridClient(CloudConfigurationManager.GetSetting("SendGridApiKey"));
        var message = new SendGridMessage();
        message.From = new EmailAddress("noreply@example.com");

        // set up the delivery address
        message.AddTo(email);

        // add a PDF attachment as base-64 encoded string
        message.AddAttachment(friendlyName + ".pdf", Convert.ToBase64String(pdfContent), "application/pdf");

        // Get the template id from SendGrid
        message.TemplateId = "582cb986-758b-436d-b9fe-5eb2c3f402ad";

        // You can add placeholder replacements
        message.AddSubstitution("<%TransactionId%>", identifier.ToString("N"));

        // block until the send completes, since this method is synchronous
        client.SendEmailAsync(message).Wait();
    }